You can run Llama 3 in LM Studio, either using a chat … The models come in two sizes: 8B and 70B parameters.

Llama 3 template: special tokens.

My use case is running the llama.cpp server and calling it from my own Python code, but unfortunately the llama.cpp server executable currently doesn't support custom …
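If the server cannot apply a custom template for you, one workaround is to build the Llama 3 prompt string yourself in Python and send it as a raw prompt. This is a minimal sketch, assuming a llama.cpp server listening on `http://localhost:8080` whose `/completion` endpoint accepts a JSON body with `prompt`, `n_predict`, and `stop` fields and returns the generated text in `content`; check the server documentation for your llama.cpp version. The helper names (`build_llama3_prompt`, `build_completion_payload`, `complete`) are hypothetical.

```python
import json
import urllib.request

# Llama 3 special tokens, as used in Meta's published prompt format.
BOS = "<|begin_of_text|>"
EOT = "<|eot_id|>"


def header(role: str) -> str:
    """Return the header that opens a turn for `role` (system, user, or assistant)."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n"


def build_llama3_prompt(system: str, user: str) -> str:
    """Format a single-turn Llama 3 prompt that ends with an open assistant header."""
    # Depending on your llama.cpp build, the server may already prepend BOS;
    # if it warns about a duplicate BOS token, drop it here.
    prompt = BOS
    if system:
        prompt += header("system") + system + EOT
    prompt += header("user") + user + EOT
    # Leave the assistant turn open so the model writes the reply.
    prompt += header("assistant")
    return prompt


def build_completion_payload(system: str, user: str, n_predict: int = 256) -> dict:
    """Build a JSON body for the server's /completion endpoint (assumed field names)."""
    return {
        "prompt": build_llama3_prompt(system, user),
        "n_predict": n_predict,
        # Stop generating at the end-of-turn token.
        "stop": [EOT],
    }


def complete(system: str, user: str, url: str = "http://localhost:8080/completion") -> str:
    """POST the payload to a running llama.cpp server and return the generated text."""
    data = json.dumps(build_completion_payload(system, user)).encode("utf-8")
    req = urllib.request.Request(url, data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["content"]
```

With a server running, `complete("You are a helpful assistant.", "Name three llama facts.")` returns the model's reply. Putting `<|eot_id|>` in `stop` keeps the model from continuing into a new turn on its own.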

The Llama 3 release introduces four new open LLMs from Meta, based on the Llama 2 architecture. Meta Llama 3 is a family of models developed by Meta Inc. It's hampered by a tiny context window that prevents you from using it for truly … The Llama 3 instruction-tuned models are optimized for dialogue use cases and outperform many of the available open-source chat models on common industry benchmarks.

In chat, intelligence and instruction following are essential, and Llama 3 has both. What's new with Llama 3? Prompt engineering is a technique used in natural language processing (NLP) to improve the performance of language models by providing them with more context and …
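One simple way to give the model more context is to place it in the system turn before the user's question. This is a minimal sketch, assuming the common role/content message format that chat templates consume; `build_messages` and the wording of the context instruction are illustrative choices, not a prescribed Llama 3 convention.

```python
def build_messages(question: str, context: list[str],
                   system: str = "You are a helpful assistant.") -> list[dict]:
    """Return chat messages with optional context snippets added to the system turn."""
    system_text = system
    if context:
        # Render each snippet as a bullet so the model sees them as separate facts.
        context_block = "\n".join(f"- {snippet}" for snippet in context)
        system_text += "\n\nUse the following context when answering:\n" + context_block
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": question},
    ]
```

The returned list still has to be rendered with the Llama 3 chat template (or sent to a server that applies it) before the model sees it.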

Building a chatbot using Llama 3. Meta AI released the next generation of their Llama models, Llama 3, the most capable openly available LLM to date. This version, with 405 billion parameters, …

LLMs are usually trained with specific predefined templates, which should then be used with the model's tokenizer for better results when doing inference tasks. Code to generate this prompt format can be found here. In this tutorial, we'll cover what you need to know to get you quickly started on preparing your …

Here, `instruction` is the user's instruction, telling the model what task it needs to complete; `input` is the user's input, the content required to carry out the instruction; `output` is the output the model should give. That is, our core training objective is …
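To fine-tune an instruction-tuned Llama 3 model on `instruction`/`input`/`output` records, each record is usually mapped onto chat turns first. This is a minimal sketch, assuming the instruction and input are joined into one user message and the output becomes the assistant reply; the field names come from the description above, while `record_to_messages` and the blank-line separator are illustrative.

```python
from typing import TypedDict


class Record(TypedDict):
    instruction: str  # the task the model should complete
    input: str        # content needed to carry out the instruction (may be empty)
    output: str       # the response the model should produce


def record_to_messages(record: Record) -> list[dict]:
    """Convert an instruction/input/output record into user and assistant turns."""
    user_text = record["instruction"]
    if record["input"]:
        # Assumed convention: separate the instruction from its input with a blank line.
        user_text += "\n\n" + record["input"]
    return [
        {"role": "user", "content": user_text},
        # The assistant turn holds the target text the model is trained to produce.
        {"role": "assistant", "content": record["output"]},
    ]
```

The resulting messages can then be formatted with the model's own chat template (for example via a Hugging Face tokenizer's `apply_chat_template`), so the training data matches the format the model expects at inference time.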

Meta AI, Built With Llama 3 Technology, Is Now One of the World's Leading AI Assistants That Can Boost Your Intelligence and Lighten Your Load, Helping You Learn, Get …

Meta Llama 3 is the latest in Meta's …


Meta recently introduced their new family of large language models (LLMs) called Llama 3.

Using Google Colab and Hugging Face

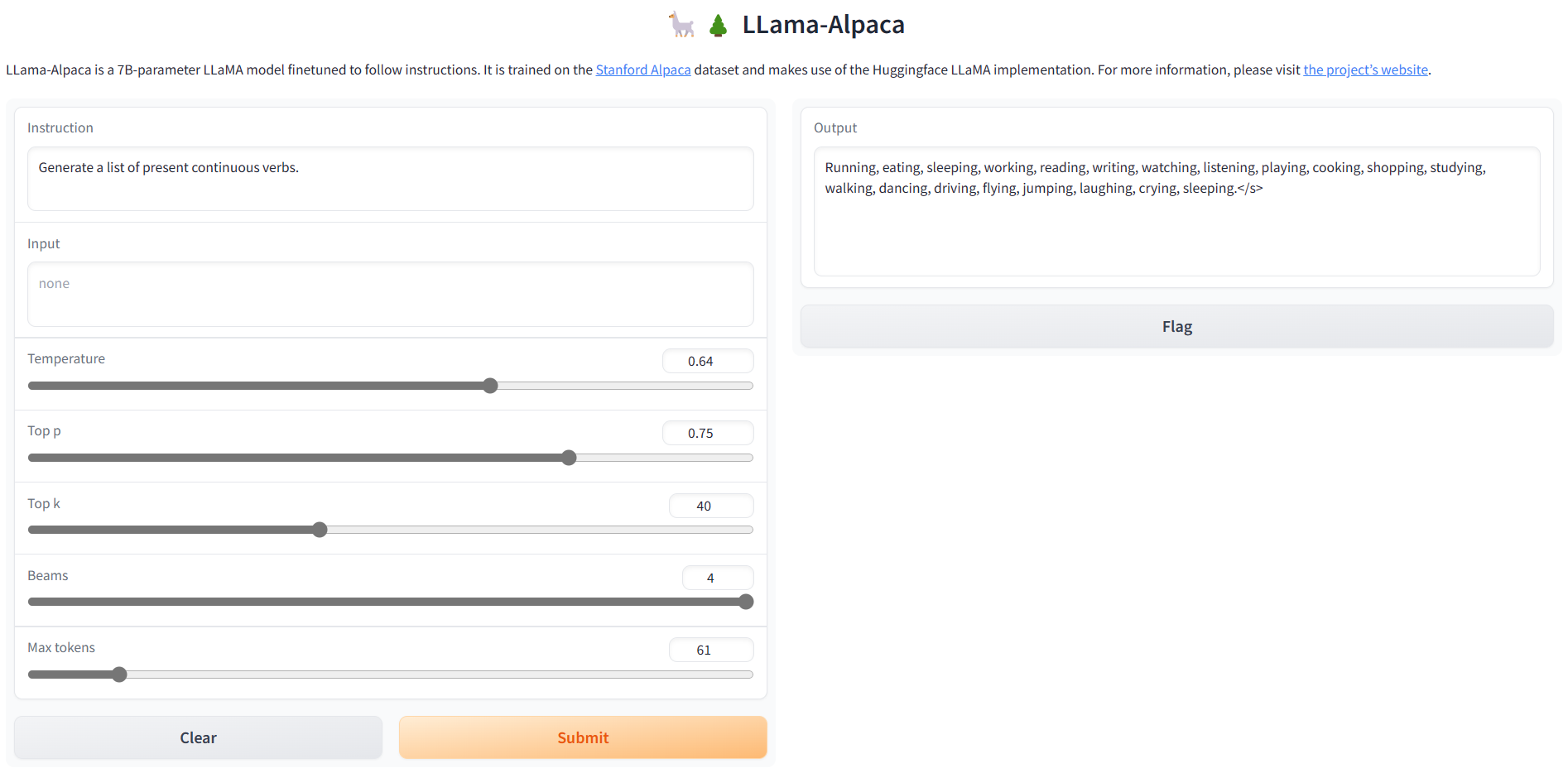


`{{ if .System }}<|start_header_id|>system<|end_header_id|> {{ .System }}<|eot_id|>{{ end }}{{ if` …
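The fragment above uses Go template syntax (as in, for example, Ollama Modelfile `TEMPLATE` blocks) and emits the system header only when a system prompt is set. The same conditional logic can be written in Python for a whole conversation. This is a minimal sketch, assuming Meta's Llama 3 layout where each turn is `<|start_header_id|>role<|end_header_id|>`, a blank line, the content, then `<|eot_id|>`; `render_llama3` is an illustrative name.

```python
def render_llama3(messages: list[dict], add_generation_prompt: bool = True) -> str:
    """Render role/content messages into a Llama 3 prompt string."""
    parts = ["<|begin_of_text|>"]
    for msg in messages:
        # Skip an empty system message, mirroring the `{{ if .System }}` guard.
        if msg["role"] == "system" and not msg["content"]:
            continue
        parts.append(
            f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n{msg['content']}<|eot_id|>"
        )
    if add_generation_prompt:
        # Open an assistant turn for the model to complete.
        parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)
```

Passing `add_generation_prompt=False` renders a finished conversation without the trailing assistant header, which is the shape you would want for training examples rather than inference.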

Can anyone help with the above topics?