Code to generate this prompt format can be found here. Yes, for optimum performance you need to apply the chat template provided by Meta.
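To show what applying the chat template produces, here is a minimal Python sketch that builds a Llama 3 Instruct prompt by hand from the special tokens `<|begin_of_text|>`, `<|start_header_id|>`, `<|end_header_id|>` and `<|eot_id|>`. The function name `format_llama3_prompt` and its `add_generation_prompt` flag are illustrative choices, not part of any library; in real code, prefer the template shipped with the model's tokenizer.

```python
# Special tokens used by the Llama 3 Instruct prompt format.
BEGIN_OF_TEXT = "<|begin_of_text|>"
START_HEADER = "<|start_header_id|>"
END_HEADER = "<|end_header_id|>"
EOT = "<|eot_id|>"


def format_llama3_prompt(messages, add_generation_prompt=True):
    """Render [{"role": ..., "content": ...}, ...] as one Llama 3 prompt string.

    Each turn is a role header, a blank line (two 0x0A newlines), the
    stripped content, then <|eot_id|>. With add_generation_prompt=True an
    empty assistant header is appended so the model writes the next turn.
    """
    parts = [BEGIN_OF_TEXT]
    for message in messages:
        parts.append(f"{START_HEADER}{message['role']}{END_HEADER}\n\n")
        parts.append(message["content"].strip())
        parts.append(EOT)
    if add_generation_prompt:
        parts.append(f"{START_HEADER}assistant{END_HEADER}\n\n")
    return "".join(parts)


prompt = format_llama3_prompt([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is the capital of France?"},
])
print(prompt)
```

The printed prompt shows each turn as a role header, a blank line, the content and `<|eot_id|>`, ending with an open assistant header for the model to complete.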

The Llama 3 release introduces four new open LLMs from Meta. Their architecture is similar to that of Llama 2, with the increase in performance coming primarily from upgraded training data. The instruction-tuned Llama 3 models are optimized for dialogue use cases and outperform many of the available open-source chat models on common industry benchmarks. You can also run Meta Llama 3 through an API.

The `llama_chat_apply_template()` function was added to llama.cpp in #5538; it allows developers to format a chat into a text prompt.

By default, `llama_chat_apply_template()` takes the template stored inside the model's metadata.

Llama 3 is the latest language model from Meta, which calls it the most capable openly available LLM to date. The 70B variant powers Meta's new chat website, meta.ai, and exhibits performance comparable to ChatGPT. In chat, intelligence and instruction following are essential, and Llama 3 has both.

Newlines (0x0A) are part of the prompt format; for clarity in the examples, they have been represented as actual new lines. The token that ends every turn is supposed to be the eos_token, but the config defines it as `<|end_of_text|>` while the chat_template uses `<|eot_id|>`.
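Because the config's eos_token (`<|end_of_text|>`) differs from the `<|eot_id|>` that the chat template places at the end of every turn, a generation loop that watches only for the config's token can run past the end of the assistant's turn. A common workaround is to treat both tokens as stop tokens. The sketch below does this on decoded text; the helper name `truncate_at_stop` is hypothetical.

```python
# Stop strings for Llama 3 chat generation: the end-of-turn token used by
# the chat template and the eos_token defined in the config.
STOP_TOKENS = ("<|eot_id|>", "<|end_of_text|>")


def truncate_at_stop(generated, stop_tokens=STOP_TOKENS):
    """Return (text before the earliest stop token, whether one was found)."""
    cut = len(generated)
    found = False
    for token in stop_tokens:
        index = generated.find(token)
        if index != -1 and index < cut:
            cut = index
            found = True
    return generated[:cut], found


reply, stopped = truncate_at_stop("Paris.<|eot_id|><|start_header_id|>user")
print(reply, stopped)
```

With Hugging Face transformers, the equivalent is to pass both token IDs to `generate()` through `eos_token_id`, as the Meta-Llama-3-8B-Instruct model card example does with `tokenizer.eos_token_id` and `tokenizer.convert_tokens_to_ids("<|eot_id|>")`.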

Meta Llama 3 Instruct.

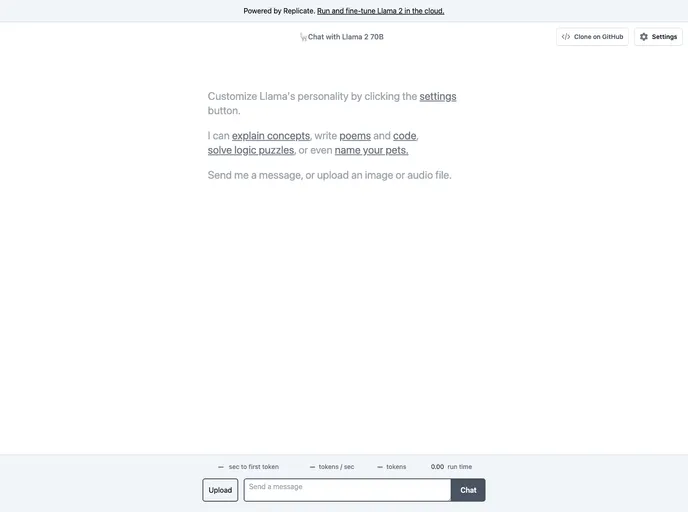

Meta Llama 3 is the latest in Meta's line of language models. Its prompt template is built from special tokens, and its 8B model is among the most performant in its class. Replicate lets you run language models in the cloud with one line of code.
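Because every turn is delimited by special tokens, the template is also easy to take apart again. As an illustration (a sketch, not a library function), the hypothetical `parse_llama3_prompt` below uses a regular expression to split a formatted prompt back into `(role, content)` pairs, one per turn closed by `<|eot_id|>`.

```python
import re

# One complete turn: <|start_header_id|>ROLE<|end_header_id|>\n\nCONTENT<|eot_id|>
TURN_PATTERN = re.compile(
    r"<\|start_header_id\|>(?P<role>.*?)<\|end_header_id\|>\n\n(?P<content>.*?)<\|eot_id\|>",
    re.DOTALL,  # content may span several lines
)


def parse_llama3_prompt(prompt):
    """Split a formatted Llama 3 prompt into (role, content) pairs.

    Only turns closed by <|eot_id|> are returned, so a trailing, still-open
    assistant header is ignored.
    """
    return [(m.group("role"), m.group("content")) for m in TURN_PATTERN.finditer(prompt)]
```

Running it on a prompt that ends with an open assistant header returns only the completed turns, which is a quick way to check that a hand-built prompt has the structure you expect.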

It’s Hampered By A Tiny Context Window That Prevents You From Using It For Truly Large Tasks.

First, let's define RAG: retrieval-augmented generation fetches passages relevant to a query from an external source and adds them to the prompt, so the model only has to read the parts of a large corpus that matter.
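To make the RAG idea concrete under a small context window, here is a toy Python sketch: it scores passages by word overlap with the query, keeps the top `k` that fit a size budget, and places them in the system message. The overlap scoring and the word-count budget are deliberate simplifications standing in for a real retriever (for example, embedding search) and a real token count from the model's tokenizer; all function names are hypothetical.

```python
import re


def words(text):
    """Lowercased set of words, used for a deliberately naive relevance score."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query, passages, k=2):
    """Rank passages by shared query words (stand-in for a real retriever)."""
    q = words(query)
    ranked = sorted(passages, key=lambda p: len(q & words(p)), reverse=True)
    # Drop passages that share no words with the query at all.
    return [p for p in ranked[:k] if q & words(p)]


def build_rag_messages(query, passages, k=2, budget_words=200):
    """Build chat messages containing only the retrieved passages that fit.

    budget_words is a hypothetical stand-in for a token budget; a real system
    would count tokens with the model's tokenizer.
    """
    context, used = [], 0
    for passage in retrieve(query, passages, k):
        size = len(passage.split())
        if used + size > budget_words:
            break  # stop before overflowing the budget
        context.append(passage)
        used += size
    system = "Answer using only the context below.\n\n" + "\n\n".join(context)
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": query},
    ]
```

The resulting messages can then go through the Llama 3 chat template like any other conversation; only the retrieved passages take up context, not the whole corpus.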

You Can Continue Serving Llama 3 With Any Llama 3 Quantized Model, But.

Meta has shared the first two models of the next generation of Llama, Meta Llama 3, for broad use. They can be run using Google Colab and Hugging Face.

Building A Chatbot Using Llama 3.

The models come in two sizes, 8B and 70B parameters, each with a base (pre-trained) and an instruction-tuned version.
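Putting the pieces together, a chatbot keeps the conversation history, re-applies the chat template on every turn, and cuts each reply at the end-of-turn token. The minimal sketch below assumes a hypothetical `generate(prompt) -> str` callable standing in for whatever backend you use (llama.cpp, Hugging Face transformers, or a hosted API); it is not tied to any library.

```python
# Special tokens of the Llama 3 Instruct prompt format.
BOT, SH, EH, EOT = "<|begin_of_text|>", "<|start_header_id|>", "<|end_header_id|>", "<|eot_id|>"
STOP_TOKENS = (EOT, "<|end_of_text|>")


def format_llama3_prompt(messages):
    """Render the history and open an assistant turn for the model to fill."""
    turns = "".join(f"{SH}{m['role']}{EH}\n\n{m['content'].strip()}{EOT}" for m in messages)
    return f"{BOT}{turns}{SH}assistant{EH}\n\n"


class Chatbot:
    """Minimal multi-turn chat loop around a text-completion backend."""

    def __init__(self, generate, system_prompt):
        # generate: hypothetical callable that takes a prompt string and
        # returns the raw completion text produced by the model.
        self.generate = generate
        self.history = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text):
        self.history.append({"role": "user", "content": user_text})
        raw = self.generate(format_llama3_prompt(self.history))
        # Keep only the text before the earliest stop token, if any.
        for token in STOP_TOKENS:
            raw = raw.split(token, 1)[0]
        reply = raw.strip()
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

Because the full history is re-sent on every turn, long conversations eventually exceed the context window; a real bot would need to drop or summarize old turns.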